Example: Character Recognition

We’ll apply the ideas we just learned to a neural network that does character recognition using the MNIST database.

import random

import numpy as np
import matplotlib.pyplot as plt

Reading the data

MNIST is a set of handwritten digits (0–9), each represented as a 28×28 pixel grayscale image.

There are 2 datasets: the training set with 60,000 images and the test set with 10,000 images. We will use a version of the data that is provided as CSV files, mnist_train.csv and mnist_test.csv, which we read below from zipped copies.

Each line of these files provides the answer (i.e., what the digit is) as the first column and then the next 784 columns are the pixel values.
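As a minimal sketch of that layout, here is one line split by hand. The string below is made up and shortened to 4 pixel values (a real line has 784); the variable names are only for illustration.

```python
import numpy as np

# hypothetical, shortened line: the label 5 followed by 4 pixel values
# (a real MNIST line has 784 pixel values after the label)
line = "5,0,128,255,64"

fields = line.split(",")

# first column: the digit this image represents
label = int(fields[0])

# remaining columns: the raw pixel values (0 to 255)
pixels = np.asarray(fields[1:], dtype=np.float32)

print(label, pixels)
```

With the real data, the pixel array could then be reshaped to (28, 28) to recover the image.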

We’ll write a class to manage this data.

Note

Some sources suggest that the data be scaled to fall in [0.01,1] instead of [0, 1] so all of the weights are used for each digit (none get multiplied by 0). This doesn’t seem to matter much here.
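For comparison, the two scalings can be sketched as follows. The pixel values are made up, and the 0.99 / 0.01 factors are one common way to map onto [0.01, 1]; this page itself uses the plain [0, 1] scaling.

```python
import numpy as np

# made-up raw pixel values covering the full 0 to 255 range
raw = np.array([0, 128, 255], dtype=np.float32)

# scaling to [0, 1], as used on this page
scaled_01 = raw / 255.0

# alternative scaling to [0.01, 1], so no input is exactly zero
scaled_shift = raw / 255.0 * 0.99 + 0.01

print(scaled_01)
print(scaled_shift)
```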

A TrainingDigit provides a scaled floating point representation of the image as a 1D array (.scaled), the correct answer (.num), and categorical data (.out) that represents the answer we want from the neural network: a 10-element array that is 1 at the index of the correct digit and 0 elsewhere. It also provides a method to plot the data.

class TrainingDigit:
    """a handwritten digit from the MNIST training set"""

    def __init__(self, raw_string):
        """we feed this a single line from the MNIST data set"""
        self.raw_string = raw_string

        # make the data range from 0 to 1
        _tmp = raw_string.split(",")
        self.scaled = np.asarray(_tmp[1:], dtype=np.float32)/255.0

        # the correct answer
        self.num = int(_tmp[0])

        # the output for the NN as a bit array
        self.out = np.zeros(10, dtype=np.float32)
        self.out[self.num] = 1.0

    def plot(self, ax=None, output=None):
        """plot the digit"""
        if ax is None:
            fig, ax = plt.subplots()

        ax.imshow(self.scaled.reshape((28, 28)),
                  cmap="Greys", interpolation="nearest")

        if output is not None:
            dstr = [f"{n}: {v:6.4f}" for n, v in enumerate(output)]
            ostr = f"correct digit: {self.num}\n"
            ostr += " ".join(dstr[0:5]) + "\n" + " ".join(dstr[5:])
            # title the axes we drew on, not whichever axes pyplot
            # considers current
            ax.set_title(ostr, fontsize="x-small")

An UnknownDigit is like a TrainingDigit but it also provides a method to check if our prediction from the network is correct.

class UnknownDigit(TrainingDigit):
    """A digit from the MNIST test database.  This provides a method to
    compare a NN result to the correct answer

    """

    def __init__(self, raw_string):
        super().__init__(raw_string)
        self.out = None

    def interpret_output(self, out):
        """return the prediction from the net as an integer"""
        return np.argmax(out)

    def check_output(self, out):
        """given the output array from the NN, return True if it is
        correct for this digit"""
        return self.interpret_output(out) == self.num
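The encoding and decoding used by these two classes can be sketched in isolation. The digit and the network output below are made up for illustration; only the use of a 10-element array and np.argmax comes from the classes above.

```python
import numpy as np

# hypothetical correct digit
num = 7

# categorical ("one-hot") target, built the same way as TrainingDigit.out
target = np.zeros(10, dtype=np.float32)
target[num] = 1.0

# made-up network output: one value per digit, the largest marks the prediction
out = np.array([0.01, 0.02, 0.10, 0.03, 0.01, 0.02, 0.01, 0.85, 0.04, 0.02])

# decode the prediction the same way as UnknownDigit.interpret_output
prediction = int(np.argmax(out))

print(target)
print(prediction == num)
```

Taking the index of the largest output means the network does not need to return exact 0s and 1s to be counted as correct.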

Now we’ll read in the data and store the training and test sets in separate lists. The files are stored zipped, so we open them with zipfile and decode each line as we read it.

import zipfile

training_set = []
with zipfile.ZipFile("mnist_train.csv.zip") as zf:
    with zf.open("mnist_train.csv") as f:
        for line in f:
            training_set.append(TrainingDigit(line.decode("utf8").strip("\n")))

len(training_set)

60000

test_set = []
with zipfile.ZipFile("mnist_test.csv.zip") as zf:
    with zf.open("mnist_test.csv") as f:
        for line in f:
            test_set.append(UnknownDigit(line.decode("utf8").strip("\n")))

len(test_set)

10000

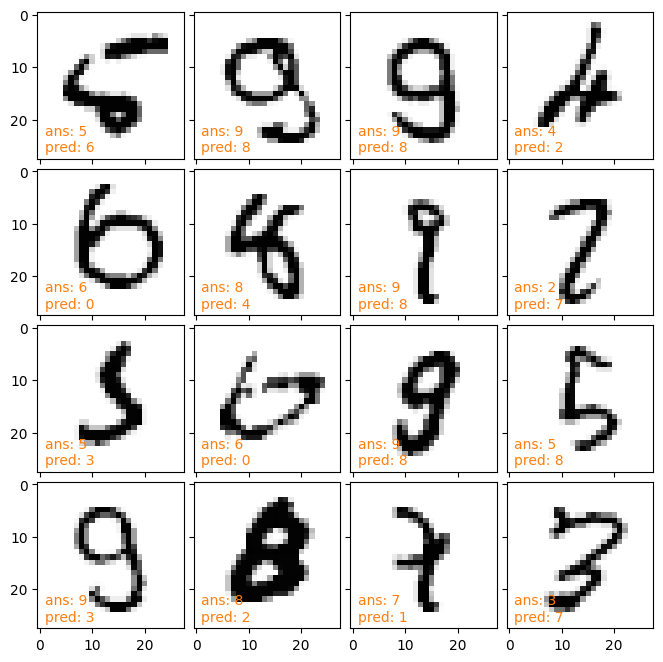

Examining the data

Let’s look at the first 16 digits in the training set.

from mpl_toolkits.axes_grid1 import ImageGrid

fig = plt.figure(1)
grid = ImageGrid(fig, 111,
                 nrows_ncols=(4, 4),
                 axes_pad=0.1)

for i, ax in enumerate(grid):
    training_set[i].plot(ax=ax)

Here’s what the scaled pixel values look like. This 784-element array is what will be fed into the network as input.

training_set[0].scaled

array([0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0.01176471, 0.07058824, 0.07058824,

0.07058824, 0.49411765, 0.53333336, 0.6862745 , 0.10196079,

0.6509804 , 1. , 0.96862745, 0.49803922, 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0.11764706, 0.14117648, 0.36862746, 0.6039216 ,

0.6666667 , 0.99215686, 0.99215686, 0.99215686, 0.99215686,

0.99215686, 0.88235295, 0.6745098 , 0.99215686, 0.9490196 ,

0.7647059 , 0.2509804 , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0.19215687, 0.93333334,

0.99215686, 0.99215686, 0.99215686, 0.99215686, 0.99215686,

0.99215686, 0.99215686, 0.99215686, 0.9843137 , 0.3647059 ,

0.32156864, 0.32156864, 0.21960784, 0.15294118, 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0.07058824, 0.85882354, 0.99215686, 0.99215686,

0.99215686, 0.99215686, 0.99215686, 0.7764706 , 0.7137255 ,

0.96862745, 0.94509804, 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0.3137255 , 0.6117647 , 0.41960785, 0.99215686, 0.99215686,

0.8039216 , 0.04313726, 0. , 0.16862746, 0.6039216 ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0.05490196,

0.00392157, 0.6039216 , 0.99215686, 0.3529412 , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0.54509807,

0.99215686, 0.74509805, 0.00784314, 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0.04313726, 0.74509805, 0.99215686,

0.27450982, 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0.13725491, 0.94509804, 0.88235295, 0.627451 ,

0.42352942, 0.00392157, 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0.31764707, 0.9411765 , 0.99215686, 0.99215686, 0.46666667,

0.09803922, 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0.1764706 ,

0.7294118 , 0.99215686, 0.99215686, 0.5882353 , 0.10588235,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0.0627451 , 0.3647059 ,

0.9882353 , 0.99215686, 0.73333335, 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0.9764706 , 0.99215686,

0.9764706 , 0.2509804 , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0.18039216, 0.50980395,

0.7176471 , 0.99215686, 0.99215686, 0.8117647 , 0.00784314,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0.15294118,

0.5803922 , 0.8980392 , 0.99215686, 0.99215686, 0.99215686,

0.98039216, 0.7137255 , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0.09411765, 0.44705883, 0.8666667 , 0.99215686, 0.99215686,

0.99215686, 0.99215686, 0.7882353 , 0.30588236, 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0.09019608, 0.25882354, 0.8352941 , 0.99215686,

0.99215686, 0.99215686, 0.99215686, 0.7764706 , 0.31764707,

0.00784314, 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0.07058824, 0.67058825, 0.85882354,

0.99215686, 0.99215686, 0.99215686, 0.99215686, 0.7647059 ,

0.3137255 , 0.03529412, 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0.21568628, 0.6745098 ,

0.8862745 , 0.99215686, 0.99215686, 0.99215686, 0.99215686,

0.95686275, 0.52156866, 0.04313726, 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0.53333336, 0.99215686, 0.99215686, 0.99215686,

0.83137256, 0.5294118 , 0.5176471 , 0.0627451 , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. ], dtype=float32)

Here’s what the categorical output looks like. This is what we expect the network to return for this image; the 1 at index 5 marks it as the digit 5.

training_set[0].out

array([0., 0., 0., 0., 0., 1., 0., 0., 0., 0.], dtype=float32)

Our network

Now we can write our neural network class. We will include a single hidden layer.
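For reference, the update rules that the class below implements can be written out as follows. This is a sketch under two assumptions that are not stated on this page: the cost being minimized is the squared error f summed over the output nodes (inferred from the factor of 2 in the train method), and η is our symbol for the learning rate. Here x is the input, y the expected output, B and A the two weight matrices, and ∘ denotes element-by-element multiplication.

```latex
\begin{align*}
\tilde{\mathbf{z}} &= g(\mathbf{B}\mathbf{x}), \qquad
\mathbf{z} = g(\mathbf{A}\tilde{\mathbf{z}}), \qquad
g(\xi) = \frac{1}{1 + e^{-\xi}} \\
f &= \|\mathbf{z} - \mathbf{y}\|^2, \qquad
\mathbf{e} = \mathbf{z} - \mathbf{y} \\
\frac{\partial f}{\partial \mathbf{A}} &=
  2\,\bigl[\mathbf{e} \circ \mathbf{z} \circ (1 - \mathbf{z})\bigr]\,
  \tilde{\mathbf{z}}^\top \\
\tilde{\mathbf{e}} &= \mathbf{A}^\top
  \bigl[\mathbf{e} \circ \mathbf{z} \circ (1 - \mathbf{z})\bigr] \\
\frac{\partial f}{\partial \mathbf{B}} &=
  2\,\bigl[\tilde{\mathbf{e}} \circ \tilde{\mathbf{z}} \circ
  (1 - \tilde{\mathbf{z}})\bigr]\,\mathbf{x}^\top \\
\mathbf{A} &\leftarrow \mathbf{A} - \eta\,\frac{\partial f}{\partial \mathbf{A}},
\qquad
\mathbf{B} \leftarrow \mathbf{B} - \eta\,\frac{\partial f}{\partial \mathbf{B}}
\end{align*}
```

The factors of the form z ∘ (1 − z) come from the derivative of the sigmoid, g′ = g (1 − g).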

Tip

Precision can make a big difference in the speed. We probably don’t need 64-bit (double) precision, so we’ll do 32-bit here. Some machine learning libraries use even more reduced precision (16-bit floating point).
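One easily checked consequence of the precision choice is memory use. The sketch below compares a weight matrix stored in 64-bit and 32-bit floats; the shape (50 hidden nodes, 784 inputs) matches the network created later on this page, but the random values and variable names are only for illustration. It does not measure speed, which depends on the machine.

```python
import numpy as np

rng = np.random.default_rng(0)

# a weight matrix with 50 hidden nodes and 784 inputs
# (NumPy's default floating point type is 64-bit)
B64 = rng.normal(0.0, 1.0, (50, 784))

# the same weights stored as 32-bit floats
B32 = B64.astype(np.float32)

# the 32-bit copy takes half the memory
print(B64.dtype, B64.nbytes)
print(B32.dtype, B32.nbytes)
```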

class NeuralNetwork:
    """A neural network class with a single hidden layer."""

    def __init__(self, input_size=1, output_size=1, hidden_layer_size=1):

        # the number of nodes/neurons on the output layer
        self.N_out = output_size

        # the number of nodes/neurons on the input layer
        self.N_in = input_size

        # the number of nodes/neurons on the hidden layer
        self.N_hidden = hidden_layer_size

        # we will initialize the weights with Gaussian normal random
        # numbers centered on 0 with a width of 1/sqrt(n), where n is
        # the length of the input to that layer
        rng = np.random.default_rng()

        # A is the set of weights between the hidden layer and output layer
        self.A = np.empty((self.N_out, self.N_hidden), dtype=np.float32)
        self.A[:, :] = rng.normal(0.0, 1.0/np.sqrt(self.N_hidden), self.A.shape)

        # B is the set of weights between the input layer and hidden layer
        self.B = np.empty((self.N_hidden, self.N_in), dtype=np.float32)
        self.B[:, :] = rng.normal(0.0, 1.0/np.sqrt(self.N_in), self.B.shape)

    def g(self, xi):
        """our sigmoid function, applied on both the hidden and output layers"""
        return 1.0/(1.0 + np.exp(-xi))

    def train(self, training_data, n_epochs=1, learning_rate=0.1):
        """Train the neural network by doing gradient descent with back
        propagation to set the matrix elements in B (the weights
        between the input and hidden layer) and A (the weights between
        the hidden layer and output layer)

        """

        print(f"size of training data = {len(training_data)}")

        for i in range(n_epochs):
            print(f"epoch {i+1} of {n_epochs}")

            # note: this shuffles the caller's list in place
            random.shuffle(training_data)

            for n, model in enumerate(training_data):

                # make the input and output data column vectors
                x = model.scaled.reshape(self.N_in, 1)
                y = model.out.reshape(self.N_out, 1)

                # propagate the input through the network
                z_tilde = self.g(self.B @ x)
                z = self.g(self.A @ z_tilde)

                # compute the errors (backpropagate to the hidden layer)
                e = z - y
                e_tilde = self.A.T @ (e * z * (1 - z))

                # corrections
                dA = -2 * learning_rate * e * z * (1 - z) @ z_tilde.T
                dB = -2 * learning_rate * e_tilde * z_tilde * (1 - z_tilde) @ x.T

                self.A[:, :] += dA
                self.B[:, :] += dB

    def predict(self, model):
        """predict the outcome using our trained matrices A and B"""
        y = self.g(self.A @ (self.g(self.B @ model.scaled)))
        return y
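One way to gain confidence in the corrections computed in train is to compare them against a finite-difference derivative on a tiny network. The sketch below does this, assuming the cost is the squared error summed over the output nodes (which is what the factor of 2 in train suggests). The sizes, random values, and the helper names loss and numerical_grad are made up for the check.

```python
import numpy as np

def g(xi):
    """sigmoid"""
    return 1.0 / (1.0 + np.exp(-xi))

def loss(A, B, x, y):
    """assumed cost: squared error summed over the output nodes"""
    z = g(A @ g(B @ x))
    return float(np.sum((z - y)**2))

rng = np.random.default_rng(12345)

# tiny, made-up layer sizes so the check runs instantly
n_in, n_hidden, n_out = 4, 3, 2

A = rng.normal(0.0, 1.0, (n_out, n_hidden))
B = rng.normal(0.0, 1.0, (n_hidden, n_in))
x = rng.random((n_in, 1))
y = np.array([[1.0], [0.0]])

# analytic gradients, written the same way as the corrections in train()
# (without the -learning_rate factor)
z_tilde = g(B @ x)
z = g(A @ z_tilde)
e = z - y
e_tilde = A.T @ (e * z * (1 - z))
grad_A = 2 * e * z * (1 - z) @ z_tilde.T
grad_B = 2 * e_tilde * z_tilde * (1 - z_tilde) @ x.T

def numerical_grad(f, M, eps=1.e-6):
    """centered finite difference of f() with respect to each element of M"""
    grad = np.zeros_like(M)
    for idx in np.ndindex(*M.shape):
        old = M[idx]
        M[idx] = old + eps
        fp = f()
        M[idx] = old - eps
        fm = f()
        M[idx] = old
        grad[idx] = (fp - fm) / (2 * eps)
    return grad

num_A = numerical_grad(lambda: loss(A, B, x, y), A)
num_B = numerical_grad(lambda: loss(A, B, x, y), B)

# both differences should be tiny compared to the gradients themselves
print(np.max(np.abs(grad_A - num_A)), np.max(np.abs(grad_B - num_B)))
```

The check uses 64-bit floats on purpose: finite differences with a small eps lose too many digits in 32-bit precision.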

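Create our neural network. The input layer has one node per pixel (784), the output layer has one node per digit (10), and we use 50 nodes in the hidden layer.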

input_size = len(training_set[0].scaled)

output_size = len(training_set[0].out)

net = NeuralNetwork(input_size=input_size, output_size=output_size, hidden_layer_size=50)

Training

Now we can train

net.train(training_set, n_epochs=10)

size of training data = 60000

epoch 1 of 10

epoch 2 of 10

epoch 3 of 10

epoch 4 of 10

epoch 5 of 10

epoch 6 of 10

epoch 7 of 10

epoch 8 of 10

epoch 9 of 10

epoch 10 of 10

Let’s see what our accuracy rate is

n_correct = 0
for model in test_set:
    res = net.predict(model)
    if model.check_output(res):
        n_correct += 1

print(f"accuracy is {n_correct / len(test_set)}")

accuracy is 0.9647

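So we are about 96.5% accurate (the exact value will vary from run to run, since the weights are initialized randomly and the training data is shuffled). We can try to improve this by training with more epochs or using a bigger hidden layer. We might also try experimenting with other activation functions.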
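If we do swap the activation function, the derivative factor used in the corrections has to change with it: the z * (1 - z) terms in train are specific to the sigmoid. The sketch below shows the sigmoid next to tanh, each with its derivative written in terms of the function's output. The function names are ours, and tanh is just one possible alternative; since it ranges over (-1, 1), the 0/1 target encoding might also need to be revisited.

```python
import numpy as np

def sigmoid(xi):
    """the activation function used on this page"""
    return 1.0 / (1.0 + np.exp(-xi))

def dsigmoid(out):
    """sigmoid derivative in terms of its output: g' = g (1 - g)"""
    return out * (1.0 - out)

def tanh(xi):
    """an alternative activation with range (-1, 1)"""
    return np.tanh(xi)

def dtanh(out):
    """tanh derivative in terms of its output: 1 - tanh^2"""
    return 1.0 - out**2

xi = np.linspace(-2.0, 2.0, 5)
for name, f, df in [("sigmoid", sigmoid, dsigmoid), ("tanh", tanh, dtanh)]:
    out = f(xi)
    print(name, out, df(out))
```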

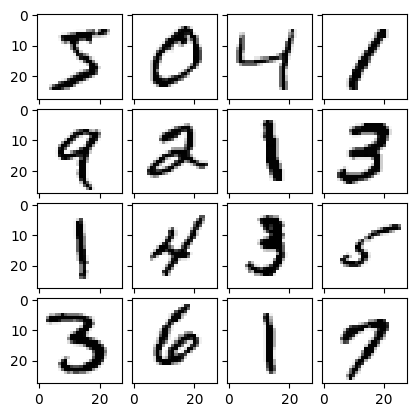

Wrong digits

Let’s look at some of the digits we get wrong

from mpl_toolkits.axes_grid1 import ImageGrid

fig = plt.figure(1, (8, 8))
grid = ImageGrid(fig, 111,
                 nrows_ncols=(4, 4),
                 axes_pad=0.1)

num_wrong = 0
for model in test_set:
    res = net.predict(model)
    if not model.check_output(res):
        model.plot(ax=grid[num_wrong])
        grid[num_wrong].text(0.05, 0.05,
                             f"ans: {model.num}\npred: {model.interpret_output(res)}",
                             transform=grid[num_wrong].transAxes,
                             color="C1", zorder=100)
        num_wrong += 1

    if num_wrong == len(grid):
        break
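Besides plotting individual failures, it can help to count which digits get mistaken for which. The sketch below tallies this in a 10×10 table; the confusion_matrix helper and the short lists of answers and predictions are made up to show the bookkeeping, not results from the network above.

```python
import numpy as np

def confusion_matrix(answers, predictions, n_classes=10):
    """counts[i, j] = number of times digit i was predicted as digit j"""
    counts = np.zeros((n_classes, n_classes), dtype=int)
    for a, p in zip(answers, predictions):
        counts[a, p] += 1
    return counts

# made-up answers and predictions, just to show the bookkeeping
answers = [4, 9, 4, 7, 9, 3]
predictions = [4, 4, 9, 7, 9, 3]

cm = confusion_matrix(answers, predictions)

# off-diagonal entries are mistakes; the diagonal holds the correct predictions
print(cm[4, 9], cm[9, 4], np.trace(cm))
```

With the objects on this page, the two lists could be built from model.num and model.interpret_output(net.predict(model)) for each model in test_set.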